Se7en Payne’s Constraint

This experiment was inspired by this post by Alan O’Leary for The Video Essay Podcast, where he writes about Matt Payne’s video essay ‘Who Ever Heard…?’:

For me, ‘Who Ever Heard…?’ is an example of ‘potential videography’. It offers a form that can be put to many other uses even as its formal character—its use of repetition and its ‘Cubist’ faceting of space and time—will tend to influence the thrust of the analysis performed with it (but when is that not true of a methodology?).

Alan O’Leary On Matt Payne’s ‘Who Ever Heard…?’ 2020

I wanted to see how this form might respond if I made my clip choices purely on sonic grounds. This was also another opportunity to explore the ‘malleability’ of the multi-channel soundtrack. Most video editing software will allow you to pull apart the six channels of a 5.1 mix and edit them separately. To me this is a fundamental sonic deformation, where the editing software allows access to the component parts of the film’s sonic archive (riffing extensively on Jason Mittell here).
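For anyone who wants to try the same pulling-apart outside an editor, here is a minimal Python sketch of the idea. It is not how Resolve does it, just an illustration under some assumptions: the soundtrack has already been exported as uncompressed, interleaved PCM, and the channels arrive in the order L, R, C, LFE, Ls, Rs. That order is common for 5.1 WAV files but is not guaranteed, so check your own file. The function and variable names are my own.

```python
import wave

# Assumed channel order for a 5.1 mix (hypothetical; verify against your file).
CHANNEL_NAMES = ["L", "R", "C", "LFE", "Ls", "Rs"]


def split_channels(frames: bytes, sample_width: int, names=CHANNEL_NAMES) -> dict:
    """De-interleave raw PCM frames into one byte string per channel."""
    frame_size = sample_width * len(names)
    if len(frames) % frame_size:
        raise ValueError("data is not a whole number of frames")
    out = {name: bytearray() for name in names}
    for start in range(0, len(frames), frame_size):
        for i, name in enumerate(names):
            offset = start + i * sample_width
            out[name] += frames[offset:offset + sample_width]
    return {name: bytes(buf) for name, buf in out.items()}


def split_wav_file(path: str) -> dict:
    """Read a six-channel PCM WAV and return its channels separately.

    Depending on the Python version and the file's header, the standard
    library `wave` module may refuse some multichannel files.
    """
    with wave.open(path, "rb") as wav:
        if wav.getnchannels() != len(CHANNEL_NAMES):
            raise ValueError("expected a six-channel file")
        frames = wav.readframes(wav.getnframes())
        return split_channels(frames, wav.getsampwidth())
```

With the channels separated like this, keeping only `"Ls"` and `"Rs"` gives the surrounds on their own, and keeping only `"L"` and `"R"` gives the front left and right.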

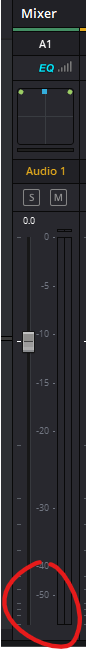

For my ‘Payne’s Constraint’ I decided to top and tail my sequence with the same image of Somerset in bed, accompanied by a different channel selection from the soundtrack each time. The opening shot uses just the surround channels, which are carrying rain at this point, but on the way back round I used the front left and right channels from the mix, where the sound of the city invades Somerset’s bedroom (and consciousness). The other sound selections are less intentional than this: I picked sounds that were interesting, or odd, but that also contributed to the sonic whole that was coming together. It’s worth pointing out that after I added the 4th or 5th clip, Resolve refused to play back any video. So, much like with the Surround Grid, this ended up being a piece fashioned without my really understanding how it would work visually until I could render it out.

A few sonic takeaways from this:

- There is a lot of thunder in this film, but no lightning.

- There is very little sky (excepting the final desert scene), but there are helicopter and plane sounds throughout.

- Low oscillating sounds are prevalent in the soundtrack. Lorries/trucks and air conditioning contribute to this, but room tones also move within the soundtrack, rather than being static ambiences.

- There is music in here that I was never consciously aware of before. Here’s ‘Love Plus One’ by Haircut 100, which I found burbling in the background of the cafe scene (clip 14 in my video).