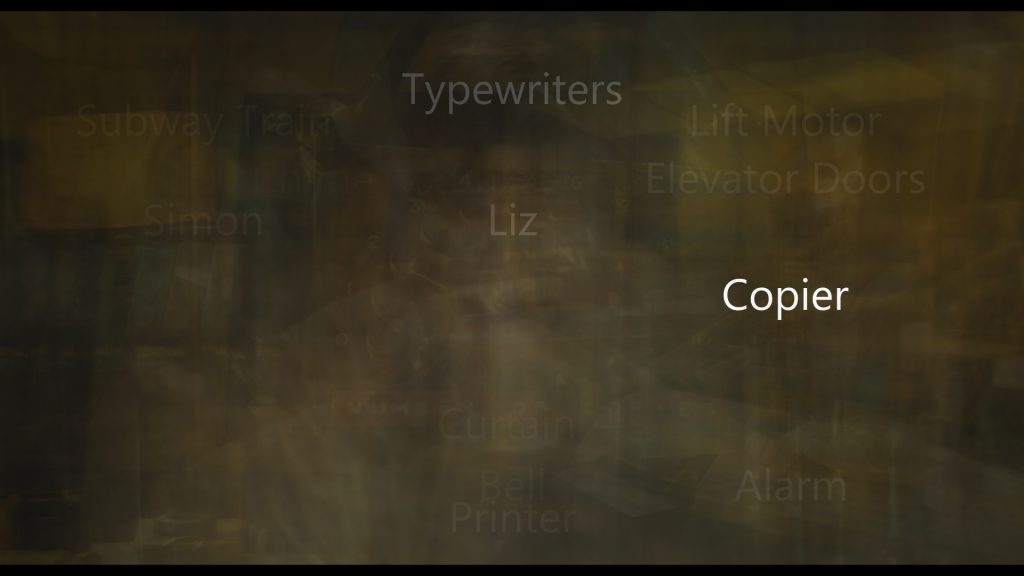

Dodge This

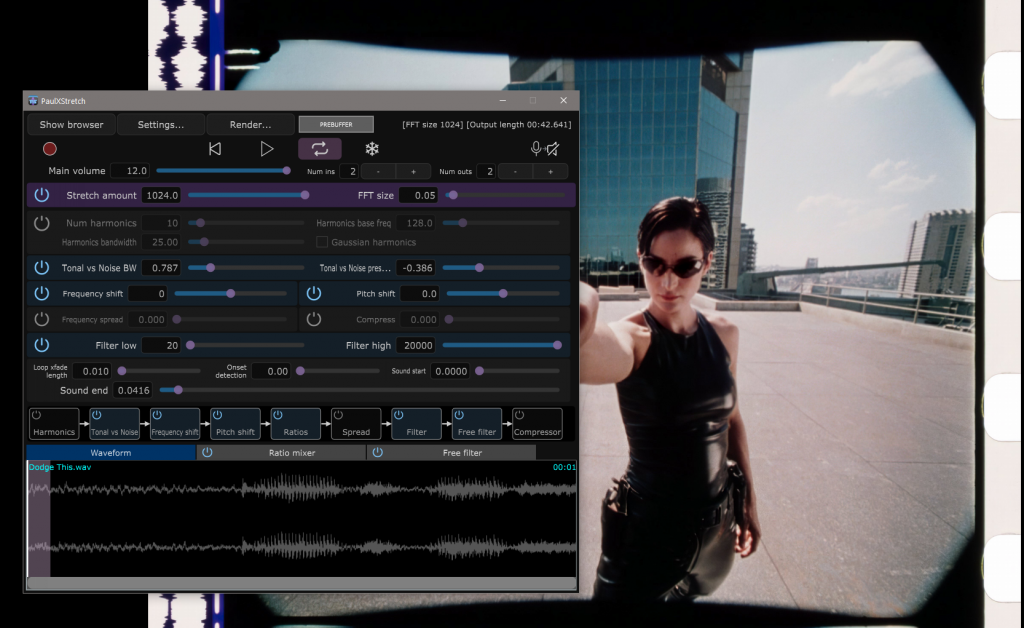

This experiment goes back to some of my earliest thinking about sound and the (still) image. It was only made possible by a new version of the audio software PaulXStretch, which turned up recently.

The frame scan from The Matrix that I’m using here was posted on Twitter some time ago (many thanks to John Allegretti for the scan). I couldn’t resist comparing this full Super 35 frame with the 2.39:1 frame that was extracted for the final film. (I also love seeing the waveform of the optical soundtrack on the left of the frame.)

The smallest frame is a screenshot from a YouTube clip; the larger one is a screenshot from the Blu-ray release. The center extraction is nice and clear, but it really highlights for me how much of the frame goes unused. (See this post for some excellent input from David Mullen ASC on Super 35 and center extraction.) This frame was still on my mind when I spotted that a new version of PaulXStretch was available.
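For a rough sense of how much of the frame is left unused, here is a quick Python sketch. It assumes nominal Super 35 full-aperture dimensions of 24.89 × 18.66 mm and a 2.39:1 extraction that uses the full width of the frame; real extractions (and the frame in this particular scan) may differ, so treat the result as a ballpark figure rather than a measurement.

```python
# Assumed nominal Super 35 full-aperture dimensions in millimetres
# (not measured from the scan in this post).
FULL_APERTURE_W_MM = 24.89
FULL_APERTURE_H_MM = 18.66


def extraction_fraction(target_ratio: float,
                        width_mm: float = FULL_APERTURE_W_MM,
                        height_mm: float = FULL_APERTURE_H_MM) -> float:
    """Fraction of the frame area kept by a full-width centre extraction."""
    extracted_h = width_mm / target_ratio  # height of the letterboxed strip
    if extracted_h > height_mm:
        raise ValueError("target ratio is taller than the source frame")
    # Widths are identical, so the area ratio equals the height ratio.
    return extracted_h / height_mm


used = extraction_fraction(2.39)
print(f"used: {used:.1%}, unused: {1 - used:.1%}")
```

Under those assumptions it prints `used: 55.8%, unused: 44.2%`, so a little under half of the frame never reaches the screen. An extraction taken from a narrower width would leave even more of it unused.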

PaulXStretch is designed for radical transformation of sounds. It is NOT suitable for subtle time or pitch correction. Ambient music and sound design are probably the most suitable use cases. It can turn any audio into hours or days of ambient soundscape, in an amazingly smooth and beautiful way.

https://sonosaurus.com/paulxstretch/

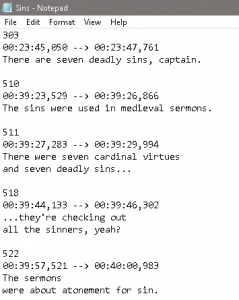

This got me thinking again about still images: the ones I’d been looking at for years in magazines, books and posters, the ones I’d fetishised, collected and archived. Those images meant so much to me and were central to my love of film, but they were also entirely silent. So, with the help of PaulXStretch, I have taken the opportunity to bring sound back to this particular still image. The soundtrack to this video is the software iterating on the 41.66667 milliseconds of audio (one frame’s duration at 24 frames per second) that accompanies this single frame from the film.
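The odd-looking 41.66667 ms figure is simply 1000 / 24: one frame’s duration at the standard 24 frames per second. The sketch below works that out and slices one frame’s worth of samples from a longer clip. The 48 kHz sample rate and the `frame_slice` helper are my own hypothetical choices for illustration, not anything taken from PaulXStretch or AEO-Light.

```python
FPS = 24              # sound-film projection rate, frames per second
SAMPLE_RATE = 48_000  # assumed audio sample rate (hypothetical choice)

# Duration of a single frame in milliseconds: 1000 / 24 = 41.666...
frame_ms = 1000 / FPS


def frame_slice(samples, frame_index, sample_rate=SAMPLE_RATE, fps=FPS):
    """Hypothetical helper: return the samples that play during one frame.

    Boundaries are rounded to the nearest sample so that sample rates which
    do not divide evenly by the frame rate still tile the clip without gaps.
    """
    start = round(frame_index * sample_rate / fps)
    end = round((frame_index + 1) * sample_rate / fps)
    return samples[start:end]


clip = list(range(SAMPLE_RATE))  # one second of dummy "samples"
one_frame = frame_slice(clip, 3)
print(f"{frame_ms:.5f} ms per frame, {len(one_frame)} samples at {SAMPLE_RATE} Hz")
```

Run as-is it prints `41.66667 ms per frame, 2000 samples at 48000 Hz`: a tiny slice of sound to stretch into an entire soundtrack.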

But wait, there’s more…

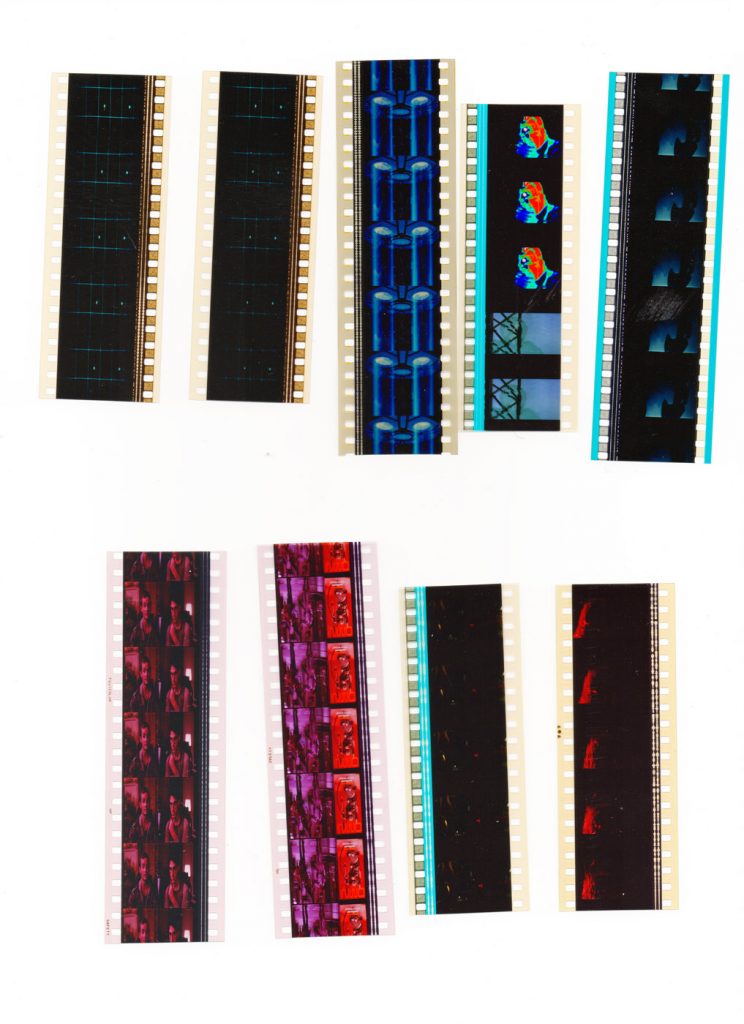

When I first had this idea, about 12 months ago, I wanted to try to accomplish it with an actual film frame. I found a piece of software (AEO-Light) that could extract the optical soundtrack information from a film frame scan and render it to audio. So I went and bought myself some strips of film.

These are quite easy to come by on eBay, but there was a fatal flaw in my plan (which I didn’t realise until some time later). On a release print like the frames I have here, the physical layout of the projector, and specifically the projector gate, leaves no room for the sound reader to sit alongside the frame as it is being projected. The optical sound reader actually lives below the gate, so the optical soundtrack is printed 21 frames ahead of the picture it belongs to (this is the SMPTE standard for making release prints, but according to this thread, how that translates to the actual threading of a projector is a little more up in the air). In other words, the soundtrack running beside any given frame belongs to a different image altogether. So in this material sense, where the optical soundtrack is concerned, sound and image only come into synchronisation at the very instant of projection.
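The 21-frame advance is easier to picture as time and as a physical length of film. This sketch assumes 24 fps projection and standard 4-perf 35mm film with a nominal 0.187 in perforation pitch; the `sound_belongs_to` helper is hypothetical and just encodes the nominal offset, not how any particular projector happens to be threaded.

```python
FPS = 24               # projection rate, frames per second
ADVANCE_FRAMES = 21    # optical sound printed ahead of picture on a 35mm release print
PERFS_PER_FRAME = 4    # assumed standard 4-perf pull-down
PERF_PITCH_IN = 0.187  # assumed nominal perforation pitch, inches


def sound_belongs_to(frame_index: int) -> int:
    """Hypothetical helper: the picture frame whose sound is printed beside frame_index.

    Film passes through the gate before it reaches the sound reader, so the
    soundtrack next to a given frame is heard 21 frames later, in sync with
    that later picture.
    """
    return frame_index + ADVANCE_FRAMES


advance_s = ADVANCE_FRAMES / FPS
advance_in = ADVANCE_FRAMES * PERFS_PER_FRAME * PERF_PITCH_IN
print(f"{advance_s:.3f} s ahead, about {advance_in:.1f} in ({advance_in * 25.4:.0f} mm) of film")
print(sound_belongs_to(100))
```

With those assumptions it prints `0.875 s ahead, about 15.7 in (399 mm) of film` and then `121`: the audio sitting next to any single frame on a release print belongs to a picture almost a second further along the strip.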

If you’ve made it this far and want to know more about projector architecture, then I highly recommend this video (I’ve embedded it to start at the ‘tour’ of the film path). Enjoy.