Internal Logic

This experiment uses Python code adapted from a number of sources (see below) to create a film ‘trailer’ based on the sound energy present in the film’s soundtrack.

In her chapter on ‘Digital Humanities’ in The Craft of Criticism (Kackman & Kearney, 2018), Miriam Posner discusses the layers of a digital humanities project: source, processing and presentation. With this experiment/video I’m stuck on the last one. I get the sense that there is a way to present this work that might expose the “possibilities of meaning” (Samuels & McGann, 1999) in a way that is more sympathetic to the internal logic of the deformance itself: a presentation mode (videographic or other?) which is self-contained rather than reliant on an accompanying explanation. So, more to be done with this one. Very much a WIP.

A brief note on the process

The code for this experiment is adapted from a number of Python scripts which were written to automatically create highlight reels from sports broadcasts (the first one I found used a cricket match as an example). Links to the various sources I’ve used are below.

Become a video analysis expert using python

Video and Audio Highlight Extraction Using Python

Creating Video Clips in Python

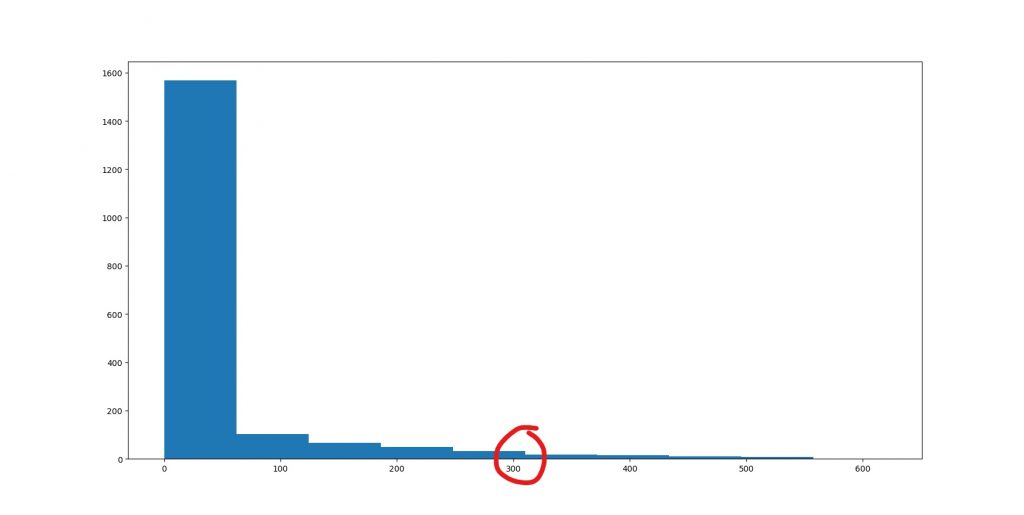

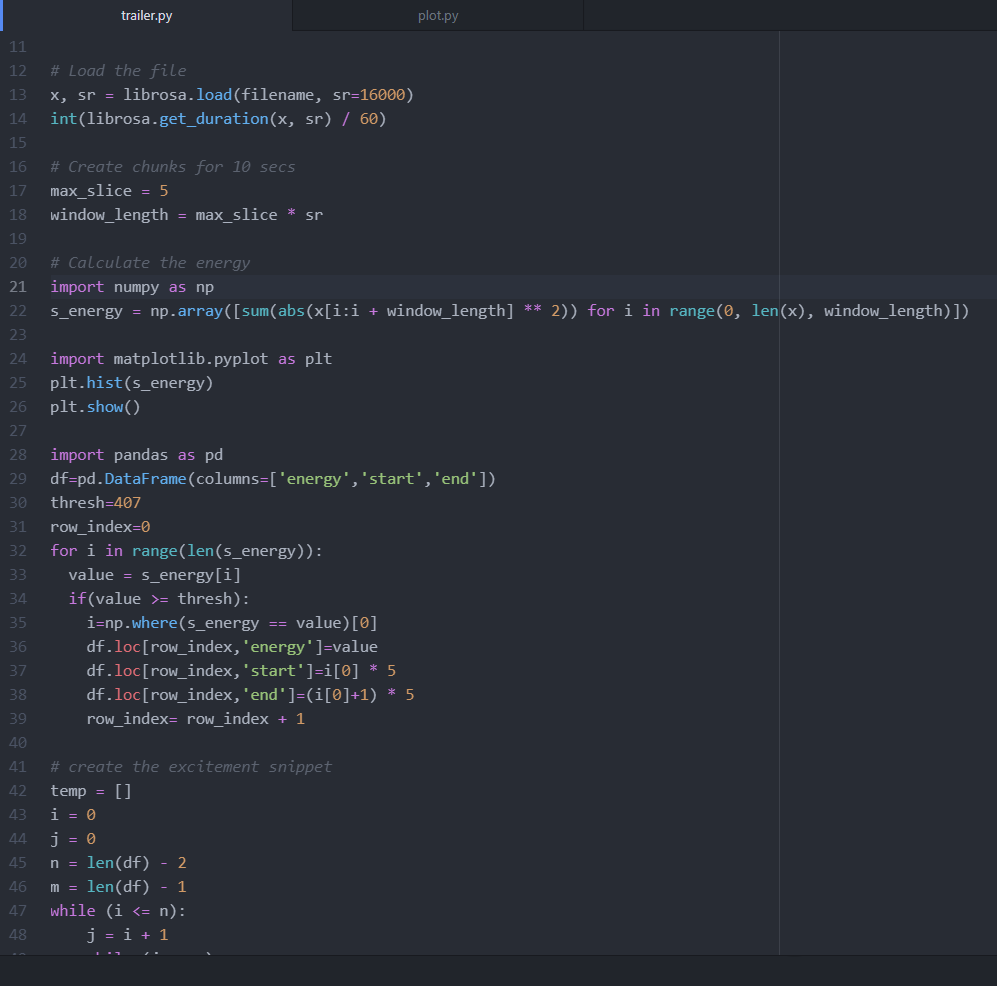

The idea is that the highlights in any sporting event will be accompanied by a rise in the ‘energy’ of the soundtrack (read as volume here, for simplicity) as the crowd and commentators get louder. The Python script analyses the soundtrack in small chunks, calculating the short-term energy of each one. The result is a plot like this one, which shows the sound energy calculated for the center channel of Dune’s sound mix.
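My own script isn’t shared here, so what follows is only a minimal sketch of the chunking step, not the code used for this video. It assumes the center channel has already been extracted and decoded into a list of mono samples scaled to -1.0..1.0, and it defines short-term energy as the mean of the squared sample values in each chunk. `chunk_energies` is a hypothetical helper name.

```python
from typing import List, Sequence


def chunk_energies(
    samples: Sequence[float], sample_rate: int, chunk_seconds: float = 2.0
) -> List[float]:
    """Return the short-term energy (mean squared amplitude) of each chunk.

    `samples` is assumed to be a mono signal (e.g. the center channel)
    scaled to -1.0..1.0. A trailing chunk shorter than `chunk_seconds` is dropped.
    """
    chunk_len = int(sample_rate * chunk_seconds)
    if chunk_len <= 0:
        raise ValueError("chunk must contain at least one sample")
    energies = []
    # Step through the signal one whole chunk at a time.
    for start in range(0, len(samples) - chunk_len + 1, chunk_len):
        chunk = samples[start:start + chunk_len]
        energies.append(sum(s * s for s in chunk) / chunk_len)
    return energies


# Toy signal: 4 samples per second, three 1-second chunks of rising loudness.
toy = [0.0] * 4 + [0.5] * 4 + [1.0] * 4
print(chunk_energies(toy, sample_rate=4, chunk_seconds=1.0))  # [0.0, 0.25, 1.0]
```

Under this definition the energy is measured in squared amplitude and always lies between 0 and 1. A script that sums the squared samples instead of averaging them would produce numbers that grow with the chunk length, which is one reason the scale of an energy axis can be hard to read without seeing the code.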

I’m not entirely clear what scale the X axis is using for the energy here (none of the blogs go into any detail on this), but as the numbers increase, so does the sound energy. The Y axis is the number of chunks in the film’s soundtrack with that energy (in this case I set the chunk size to 2 seconds). To create the ‘trailer’ I picked a threshold based on the plot (the red circle), and the code extracted every chunk of the film with a sound energy above that figure. Choosing the threshold is not an exact science, so I tried to pick a figure that gave me a manageable amount of video to work with: a higher threshold means less content, a lower one more. Note: the video above features the film’s full soundtrack, but the clip selections were made on energy calculations from the center channel alone.
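The plot and the threshold step can be sketched in the same hedged way. Assuming a list of per-chunk energies (one value per 2-second chunk), the two hypothetical helpers below count how many chunks fall into each energy bin, which is what the plot’s Y axis shows, and turn every chunk above a chosen threshold into a start/end time in seconds. The bin width and the merging of consecutive loud chunks into a single clip are my assumptions, not details taken from the source blogs.

```python
from collections import Counter
from typing import Dict, List, Sequence, Tuple


def energy_histogram(energies: Sequence[float], bin_width: float) -> Dict[float, int]:
    """Count chunks per energy bin: keys are bin starts (X axis), values are counts (Y axis)."""
    counts = Counter(int(e // bin_width) for e in energies)
    return {b * bin_width: n for b, n in sorted(counts.items())}


def loud_segments(
    energies: Sequence[float], chunk_seconds: float, threshold: float
) -> List[Tuple[float, float]]:
    """Return (start, end) times in seconds for chunks with energy above `threshold`.

    Consecutive loud chunks are merged into one segment (an assumed design choice).
    """
    segments: List[Tuple[float, float]] = []
    last_index = None  # index of the previous loud chunk, if any
    for i, energy in enumerate(energies):
        if energy <= threshold:
            continue
        start, end = i * chunk_seconds, (i + 1) * chunk_seconds
        if last_index == i - 1:
            # Extend the previous segment instead of starting a new clip.
            segments[-1] = (segments[-1][0], end)
        else:
            segments.append((start, end))
        last_index = i
    return segments


energies = [0.0, 0.25, 1.0, 0.9, 0.1, 0.8]
print(energy_histogram(energies, bin_width=0.5))  # {0.0: 3, 0.5: 2, 1.0: 1}
print(loud_segments(energies, chunk_seconds=2.0, threshold=0.5))  # [(4.0, 8.0), (10.0, 12.0)]
```

Raising `threshold` shrinks the list of segments and lowering it grows it, mirroring the trade-off described above; summing `end - start` over the segments gives the running time of the resulting ‘trailer’ before any clips are actually cut from the film.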

I am not a coder!

I’m not going to share the code here. It took an age to get working (largely as a result of my coding ignorance), and I can’t guarantee that it will work for anyone else the way I’ve cobbled it together. If anyone is interested in trying it out, though, please get in touch; I’m more than happy to run through it on Zoom or something.

Kackman, M. and Kearney, M.C. eds., 2018. The craft of criticism: Critical media studies in practice. Routledge.


Samuels, L. and McGann, J., 1999. Deformance and interpretation. New Literary History, 30(1), pp.25-56. Available at: https://www.jstor.org/stable/20057521